Introduction

I recently finished reworking my selfhosted setup from scratch. I have been selfhosting since 2017: first on an old netbook that was otherwise collecting dust, and then on a dedicated mini-PC that I built to replace it. However, like a typical selfhoster, one day I decided that I wanted more, so I built another, more powerful server, though I am sure some selfhosters would still consider it mini.

Since I was assembling a new machine from scratch, I also decided that rather than migrating my fragile Docker Compose setup over to it, I would rebuild the setup entirely, applying all the lessons I learned along the way. As a result, some of the major changes I made to my setup are:

- I added a VPS to serve as an external proxy and to host services that cannot suffer as much downtime while I am playing around with (and breaking) the main server, such as this website with the blog that you are currently reading,

- Everything must be deployed with an Ansible playbook for reproducibility,

- I migrated all containerised services from rootful Docker to Podman running rootless with each service running as a separate user,

- I migrated from Docker Compose to SystemD for managing containerised services,

- I significantly improved my backup infrastructure such that backups are now more frequent, do not cause downtime, and are easily testable.

Running all services in rootless containers as separate users, spread over two hosts (a VPS in the cloud and a physical machine at my home), introduced an interesting networking challenge, which is the subject of this brief blog post series.

It will be split into three parts:

- Part 1: Rootless Podman and SystemD

In the first part of this series, I will explain how to run rootless Podman containers and manage them with SystemD. I will show how to network related containers within a pod and how to ensure they remain up to date.

- Part 2: Bridging containers

Networking containers on the same host almost always involves a bridge. In this part I explain how to set up a bridge on the host and connect rootless containers belonging to different rootless users.

- Part 3: Connecting two bridges with WireGuard

In my setup, each of the hosts runs its own containers connected via their host’s bridge. The last piece to network all the containers together is a WireGuard VPN tunnel that allows containers of one host to talk to containers of the other host.

I will be adding links to the relevant posts as I complete them. If any links are missing that means I am still working on those parts.

The problem

People have been networking containers for years now. Why am I making it sound so complicated? There are two main challenges that I had to overcome to connect all my services to each other:

- All services are run with Podman as rootless containers by different non-root users.

- The services are spread over two hosts in two different physical locations.

Rootless services

For security reasons I wanted to move away from running rootful Docker to rootless Podman. Since Podman let me run my services as a non-root user, I also leveraged this to run each service as a different user. Similar to how www-data is the typical system user for running a web server, I wanted a different system user for each of my services. Whilst running rootless containers is not necessarily more secure than running containers as root, I still like having each service running as a different user for system administration purposes.

This, however, creates a challenge. Rootless networking between containers with Podman is not trivial. The easiest way to connect rootless containers to each other is to run them in the same pod. Whilst I do make heavy use of this feature to connect containers that combine to offer a single external-facing service, e.g., for connecting a Nextcloud container to a Redis container, it does not make much sense to put containers from independent services into the same pod. Even if I wanted to do that (and it were possible to share pods between different rootless users), running containers in the same pod means that they share various namespaces, which goes against my goal of isolating services by running them as separate system users.

Therefore, the remaining option for connecting the different services is to connect them somehow via the container host.

Services on two hosts

I decided to rent a VPS in addition to running my own physical machine for two reasons. First, for security reasons the VPS serves as the entrypoint to my services from the outside world. This means that I do not need to open any ports on my home router nor publish my home IP address in any DNS service. Second, it allows me to run services with higher uptime requirements (such as this website) without having to disrupt them when I want to tinker with my server at home on which I mostly run services for my own personal use.

This creates the second challenge. As described above, it is already difficult to connect rootless containers on a single host, and now I want to connect rootless containers running on two separate hosts. If both hosts were publicly exposed, that would be straightforward: the containers could use each other’s hosts’ public addresses. However, one of the major reasons for using two hosts is precisely to not expose my home server to the outside world. As such, the home server does not have its own public IP address.

Being able to communicate between containers running on two hosts is a requirement for running a containerised and rootless reverse proxy. I want to connect to all my services on port 443 and so a reverse proxy which can redirect connections based on domain name alone is a must. However, exposing a single port on a single IP address means that one reverse proxy must be able to connect to any service regardless of whether it runs on the same host or on the remote host. Therefore, I had to come up with some solution to network the different services in a non-public way.
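To illustrate what "redirect connections based on domain name alone" means, here is a minimal Python sketch of the lookup a reverse proxy performs. It is not the proxy I actually run. The domain names, addresses, ports, and the `pick_upstream` helper are all hypothetical and exist only for illustration.

```python
from typing import Dict, Optional, Tuple

# Hypothetical mapping from requested host name to backend (address, port).
# The names and private addresses below are made up for this example.
UPSTREAMS: Dict[str, Tuple[str, int]] = {
    "blog.example.org": ("10.0.1.10", 8080),   # imagined container on the VPS
    "cloud.example.org": ("10.0.2.20", 8080),  # imagined container on the home server
}


def pick_upstream(host_header: str) -> Optional[Tuple[str, int]]:
    """Return the backend for a requested host name, or None if unknown."""
    # Drop an optional ":port" suffix and normalise case,
    # since host names are case-insensitive.
    hostname = host_header.split(":", 1)[0].strip().lower()
    return UPSTREAMS.get(hostname)


if __name__ == "__main__":
    print(pick_upstream("Blog.example.org:443"))
    print(pick_upstream("unknown.example.org"))
```

The point of the sketch is that both backends sit behind the same public port, yet one of them lives on a different host. The proxy can therefore only do its job if that private address is reachable from wherever the proxy runs.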

The solution

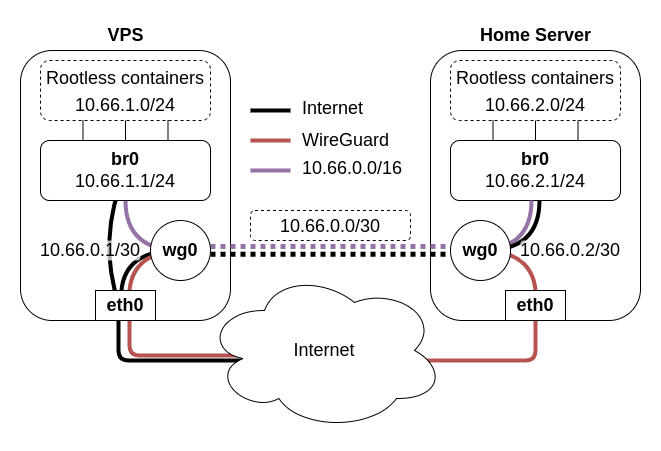

The diagram below summarises the full solution to the challenges I outlined above.

The rootless containers communicate with other containers on the same host via a shared bridge, br0, which is created and managed by the root user. To connect to the containers on the other host, routing table rules direct traffic between the two bridges via a WireGuard tunnel, wg0. Additionally, the routing table rules are set up such that all outgoing connections from the home server containers are rerouted via the VPS to disguise the fact that they are actually running at home.
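The routing decision described above can be modelled as a toy Python function. This is only a sketch of the logic, not the actual routing rules. The two bridge subnets, the `uplink` label for the VPS's public interface, and the `egress_interface` helper are assumptions made up for this example; only br0 and wg0 come from my setup.

```python
import ipaddress

# Hypothetical bridge subnets, chosen only for illustration.
VPS_BRIDGE = ipaddress.ip_network("10.0.1.0/24")
HOME_BRIDGE = ipaddress.ip_network("10.0.2.0/24")


def egress_interface(host: str, destination: str) -> str:
    """Model which interface container traffic leaves through on a given host."""
    if host not in ("home", "vps"):
        raise ValueError(f"unknown host: {host}")

    addr = ipaddress.ip_address(destination)
    if host == "home":
        local, remote = HOME_BRIDGE, VPS_BRIDGE
    else:
        local, remote = VPS_BRIDGE, HOME_BRIDGE

    if addr in local:
        return "br0"  # another container on the same host
    if addr in remote:
        return "wg0"  # a container on the other host, reached via the tunnel
    # Anything else is the outside world. Home server containers go out
    # via the VPS, whereas the VPS uses its own public interface
    # (labelled "uplink" here as a placeholder).
    return "wg0" if host == "home" else "uplink"


if __name__ == "__main__":
    for host, dest in [("home", "10.0.2.5"), ("home", "93.184.216.34"),
                       ("vps", "10.0.2.5"), ("vps", "93.184.216.34")]:
        print(host, dest, egress_interface(host, dest))
```

Note the asymmetry in the last branch: only the home server sends its internet-bound container traffic through the tunnel, which is what hides the home IP address.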

In the rest of this blog series, I will show how to set this network up step by step.

Ansible Edda

The blog posts are all based on my own selfhosted deployment. If you are interested in having a look at it, I maintain and document my Ansible playbooks in a repository that I keep on, you guessed it, the selfhosted server itself: https://git.thenineworlds.net/the-nine-worlds/ansible-edda.

DISCLAIMER: Whilst I made the repository public, it is not meant to be used directly by anybody else for their own selfhosted setups. I am sharing the repository with the intention of it being used as a reference and nothing more. Perhaps you can find some useful snippets and solutions that you may want to adapt for your own playbooks.

DISCLAIMER: The repository may be temporarily offline even though you can connect to my website and read this blog. The website with the blog is hosted on the VPS, which I try to keep online as much as possible, but the git repository is hosted on the machine at home, which I may be tinkering with at any time. If you find the repository offline, please try again in a few minutes, a few hours, or a few days.

Next: Rootless Podman and SystemD

Continue to the next part of this series: Part 1: Rootless Podman and SystemD (TBA).